Many years ago, as a senior computer science student, I spent my days browsing through various job postings online, hoping to find a suitable internship position as a programmer.

In addition to intern positions, I would occasionally click on the ads for "senior engineer" positions. Looking back on those ads now, what struck me most, besides the dazzling technical jargon, was the requirement for years of experience, often stated on the very first line: "This position requires 5+ years of experience".

As a complete novice who had never worked a day in the field, these experience requirements seemed excessive. But while I was feeling a bit discouraged, I couldn't help but fantasize, "A programmer with five years of experience must be really impressive, right? Is writing code as easy as eating cookies for them?"

Time flies: more than a decade has passed in the blink of an eye. Looking back now, I find myself a proud programmer with 14 years of experience. After years of fighting through the trenches of the software development industry, I've come to realize that many aspects are quite different from what I imagined during my senior year of college. For example:

- Programming doesn't get much easier with experience; the idea that it's "as easy as eating cookies" only happens in dreams.

- Writing code for many "big projects" is not only uninteresting but also dangerous, and much less fun than solving an algorithmic problem on LeetCode.

- Thinking only from a technical perspective doesn't make you a good programmer; some things are much more important than technology.

Upon reflection, there are many more such insights about programming. I've summarized eight of them in this article. If any of them resonate with you, I would be very pleased.

1. Writing code is easy, but writing good code is hard

Programming used to be a highly specialized skill with a high barrier to entry. In the past, if an average person wanted to learn programming, the most common approach was to read books and documentation. However, most programming books were quite abstruse and unfriendly to beginners, causing many to give up before they could ever enjoy the fun of programming.

But now, learning to code is becoming more accessible. Learning no longer means plowing through textbooks; instead, there are many new ways to learn. Watching tutorial videos, taking interactive courses on Codecademy, or even playing coding games on CodeCombat: everyone can find a learning method that suits them.

Programming languages are also becoming more user-friendly. Classic languages like C and Java are no longer the first choice for most beginners, and many simpler, more accessible dynamic languages are now popular. IDEs and other tools have also improved. Together, these factors flatten the learning curve for programming.

In short, programming has shed its mystical aura, transforming from an arcane skill mastered by a select few to a craft that anyone can learn.

But a lower barrier to entry and friendlier programming languages don't mean that anyone can write good code. If you have been involved in any "enterprise" software projects, let me ask you a question: "What is the quality of the code in the projects you work on every day? Is there more good code or more bad code?"

I'm not sure what your answer is, but let me share mine.

Good code is still rare

In 2010, I changed jobs to work for a large Internet company.

Before joining this company, I had only worked in start-ups with about ten people, so I had high expectations of my new employer, especially in terms of software quality. I thought to myself, "Considering this is a 'big' project supporting products used by millions of users, the code quality has to be much better than what I've seen before!"

It took me only a week at the new company to realize how far off the mark I was. The code quality of the so-called "big" project was far from what I had expected. When I opened the IDE, functions consisted of hundreds of lines of code and mysterious numeric literals were everywhere, making the development of even the smallest feature seem Herculean.
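To make the "mysterious numeric literals" complaint concrete, here is a small made-up sketch in Python. The function names, the grace period, and the fee rate are all invented for illustration and are not taken from that project. It shows the same logic twice: once with bare literals and once with named constants.

```python
# Hypothetical example: every name and value here is invented for illustration.

# Before: the reader has to guess what 3 and 0.15 mean.
def late_fee_unclear(days_late, amount):
    if days_late > 3:
        return amount * 0.15
    return 0.0


# After: each literal has a name that explains its purpose.
GRACE_PERIOD_DAYS = 3
LATE_FEE_RATE = 0.15


def late_fee(days_late: int, amount: float) -> float:
    """Return the fee owed for a payment that is `days_late` days overdue."""
    if days_late > GRACE_PERIOD_DAYS:
        return amount * LATE_FEE_RATE
    return 0.0
```

Both versions behave identically. The only difference is how much the next reader has to guess.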

After that, as I worked in more companies and saw more software projects, I came to understand a truth: No matter how big the company or how impressive the project, encountering good code in practice is still a rare event.

What is good code?

Let's go back to the question of what exactly defines good code. A quote from Martin Fowler is often cited in this context:

"Any fool can write code that a computer can understand. Good programmers write code that humans can understand."

I believe this statement can serve as a starting point for evaluating good code: it must be readable, understandable, and clear. The first principle of writing good code is to put the human reader first.

Beyond readability, there are many other dimensions to consider when evaluating code quality:

- Adherence to language conventions: Does it follow the recommended practices of the programming language in use? Are language features and syntactic sugar used appropriately?

- Ease of Modification: Does the code design account for future changes, and is it easy to modify when those changes occur?

- Reasonable API Design: Is the API design reasonable and easy to use? A good API is convenient for simple scenarios and can be extended as needed for advanced use cases.

- Adequate Performance: Does the code performance meet current business needs, with room for improvement in the future?

- Avoidance of Overdesign: Does the code suffer from overdesign or premature optimization?

- ...
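As one possible illustration of the "Reasonable API Design" item above, here is a minimal Python sketch of a helper that is convenient in the simple case and extensible in the advanced one. The `retry` function and its parameters are hypothetical names I made up for this example; they do not come from any particular library.

```python
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def retry(
    func: Callable[[], T],
    *,
    attempts: int = 3,
    delay_seconds: float = 0.0,
    retry_on: Tuple[Type[BaseException], ...] = (Exception,),
) -> T:
    """Call `func` until it succeeds or `attempts` calls have failed."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts + 1):
        try:
            return func()
        except retry_on:
            if attempt == attempts:
                raise  # Out of attempts: let the caller see the last error.
            time.sleep(delay_seconds)
    raise AssertionError("unreachable")


# Simple scenario: the defaults are enough.
answer = retry(lambda: 42)

# Advanced scenario: behaviour is tuned through keyword-only options.
answer = retry(lambda: 42, attempts=5, retry_on=(TimeoutError,))
```

Keeping the options keyword-only is a design choice in this sketch: simple calls stay short, and advanced calls remain readable at the call site.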

In short, for programmers at any level, good code doesn't come easy. Writing good code requires a delicate balance across multiple dimensions, meticulous design, and continuous refinement.

Given this, is there a shortcut to mastering the craft of coding?

The shortcut to writing good code

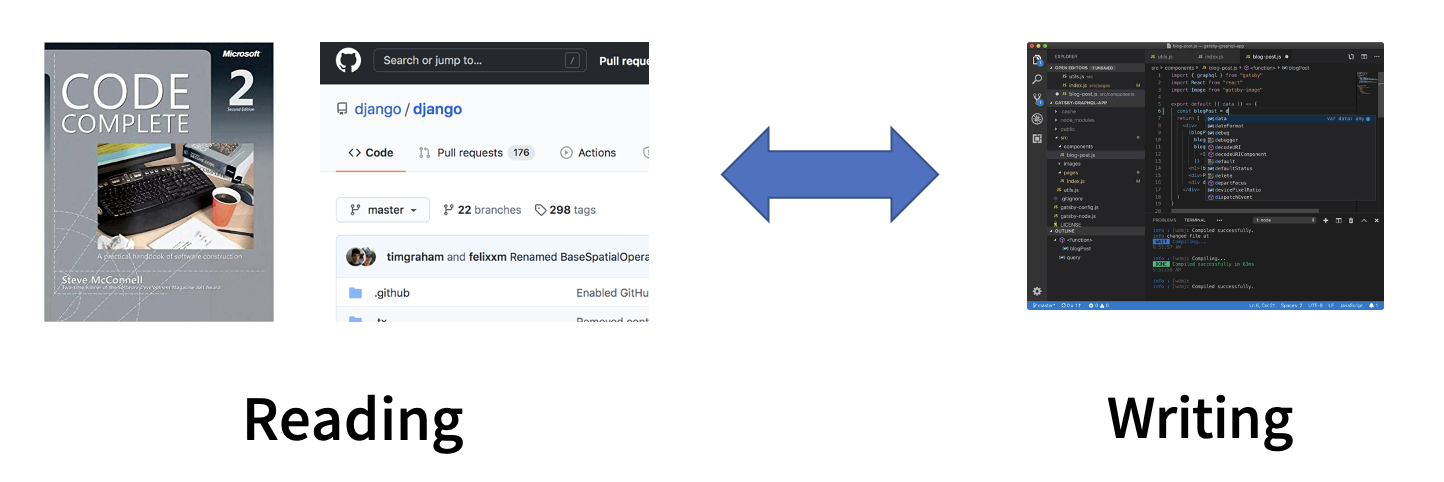

In many ways, I think programming is a lot like writing. Both involve using text and symbols to convey ideas, albeit in slightly different ways.

When it comes to writing, I like to ask a question about writers: "Have you ever heard of a writer who doesn't read? Have you ever heard of a writer who claims to read only his own work and not the work of others?" My guess is that the answer is probably NO.

If you do some research, you'll find that many professional writers spend their days in a constant cycle of reading and writing. They spend a significant amount of time each day reading a variety of texts and then writing.

As "wordsmiths," programmers often neglect reading. However, reading is an essential part of quickly improving your programming skills. In addition to the projects we encounter in our daily work, we should read more classic software projects to learn about API design, module architecture, and code-writing techniques.

Beyond code and technical documents, it's also beneficial to read programming books regularly to maintain the habit of reading. In this regard, I believe Jeff Atwood's article, "Programmers Don't Read Books -- But You Should", written 15 years ago, is still relevant today.

The shortcut to improving programming skills is hidden in the endless cycle of "Reading <-> Programming".

2. The essence of programming is "creating"

In the daily work of a programmer, many things can fill you with a sense of accomplishment and even make you involuntarily exclaim, "Programming is the best thing in the world!" For example, fixing an extremely difficult bug, or doubling code performance with a new algorithm. But of all these accomplishments, none can compare to the act of creating something with your own hands.

When you're programming, opportunities to create new things are everywhere, because creating isn't just about releasing a new piece of software. Writing a reusable utility function and designing a clear data model both fall under the category of creating.

For programmers, maintaining a passion for "creating" is crucial because it can help us to:

- Learn more efficiently: The most effective way to learn a new technology is to build a real project with it. Learning through the process of creation yields the best results.

- Encounter extraordinary things: Many world-changing open source software projects were originally started by their authors out of pure interest, such as Linus Torvalds with Linux and Guido van Rossum with Python.

While there are many benefits to "creating", and programmers have plenty of opportunities to engage in it, many often lack the awareness of being a "creator." This is similar to the widely told story about a philosopher who asked bricklayers what they were doing. Some were clearly aware they were building a cathedral, while others thought they were merely laying bricks. Many programmers are like the latter, seeing only the bricks, not the cathedral.

Once you start seeing yourself as a creator, your perspective on things can change drastically. For example, when adding error messages to an API, creators can escape the mental trap of "just getting the job done" and ask themselves more important questions: "What kind of product experience do I want to create for the user? What error messages will best help me achieve that goal?"
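Here is a rough Python sketch of what that question can lead to. The `transfer` function and the `TransferError` class are hypothetical, invented only to show error messages that tell the user what went wrong and what to do next.

```python
class TransferError(Exception):
    """Hypothetical error raised by a hypothetical money-transfer API."""


def transfer(balance: int, amount: int) -> int:
    """Return the new balance after withdrawing `amount` (both in cents)."""
    if amount <= 0:
        raise TransferError(
            f"Transfer amount must be positive, but got {amount}. "
            "Pass the amount in cents, for example 1500 for $15.00."
        )
    if amount > balance:
        raise TransferError(
            f"Cannot transfer {amount} cents: only {balance} cents are available. "
            "Lower the amount or top up the account first."
        )
    return balance - amount
```

A bare "invalid request" would also get the job done. The messages above are what the creator mindset adds.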

Like any useful programming pattern, the "creator mindset" can become an important driving force in your career. So now ask yourself: "What will my next creation be?"

3. Creating an efficient trial-and-error environment is crucial

I was once involved in the development of an Internet product that was beautifully designed, feature-rich, and used by a massive number of users every day.

But despite its market success, the quality of the engineering was terrible. If you were to dive into its backend repository and check every directory, you wouldn't find a single line of unit test code, let alone any other automated testing process. The business logic was extremely complex, resulting in a tangle of unexpected code dependencies. Developing a new feature often risked breaking existing functionality.

As a result, both the developers and the product team had to be on high alert every time the project was released, creating a tense atmosphere. The release process was thrilling, and emergency rollbacks were common. Working in such an environment, one might not necessarily grow technically, but their psychological resilience would surely be tested.

Programming is supposed to be fun, but when coding for such a project, the joy was nowhere to be found. What exactly takes the fun out of programming?

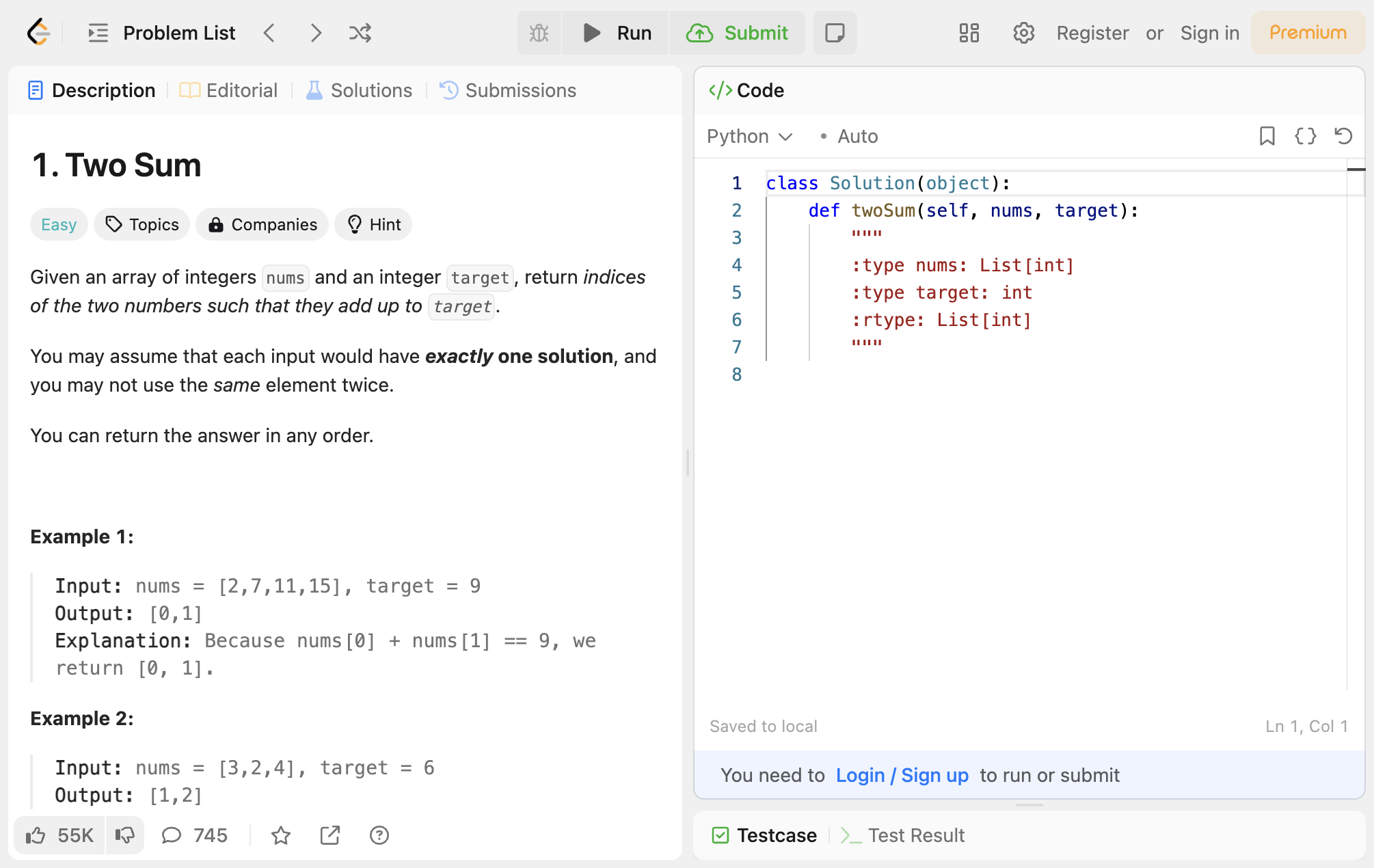

The ideal programming experience ≈ "solving LeetCode problems"

LeetCode is a well-known programming learning website that offers a lot of programming problems covering various levels of difficulty, most of which are algorithm-related. Users can select an interesting problem and code directly in the browser (supporting multiple programming languages) and execute it. If all test cases are passed, the solution is considered successful.

Solving problems on LeetCode is similar to playing a game—challenging and fun. The entire process perfectly exemplifies an idealized programming experience:

- Separation of concerns: Each problem is an independent entity, allowing developers to immerse themselves in one problem at a time.

- Fast and accurate feedback: After each code adjustment, developers can quickly get feedback from automated tests.

- Zero-cost trial and error: There are no negative consequences if the code has syntax errors or logical flaws, reducing mental load.

However, as you read this, you might think I'm stating the obvious.

"So what? Isn't that how you solve LeetCode problems and write scripts? What's so special about that?" You might add, "Do you know how complex our company's projects are? They're huge in scale, with countless modules. Do you understand what I'm saying? Serving millions of users every day, with several databases and three types of message queues, of course, development is a bit more troublesome!"

Indeed, software development varies greatly and can't always be as straightforward and pleasant as solving problems on LeetCode. But that doesn't mean we shouldn't strive to improve the programming environment we're in, even if only a little bit.

To improve the programming experience by improving the environment, the concepts and tools available include:

- Modular thinking: Properly designing each module in the project to reduce coupling and increase orthogonality.

- Design principles: At the micro level, apply classic design principles and patterns such as the "SOLID" principles.

- Automated testing: Write good unit tests, use mocking techniques when appropriate, and cover critical business paths with automated testing.

- Shorten feedback loops: Switch to faster compiling tools, optimize unit test performance, and do everything possible to reduce the "code change to feedback" wait time.

- Microservice architecture: When necessary, break down a large monolith into multiple microservices with distinct responsibilities to disperse complexity.

- ...
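To illustrate the automated testing and feedback loop items above, here is a minimal sketch using Python's standard `unittest` and `unittest.mock` modules. The `send_welcome_email` function and the `mailer` object are hypothetical. The point is only that replacing a slow external dependency with a mock lets the tests run in milliseconds.

```python
import unittest
from unittest.mock import Mock


def send_welcome_email(user_email: str, mailer) -> bool:
    """Send a welcome message through `mailer`; return True when one was sent."""
    if "@" not in user_email:
        return False
    mailer.send(to=user_email, subject="Welcome!")
    return True


class SendWelcomeEmailTest(unittest.TestCase):
    def test_sends_mail_to_valid_address(self):
        mailer = Mock()  # Stands in for a real, slow mail service.
        self.assertTrue(send_welcome_email("ann@example.com", mailer))
        mailer.send.assert_called_once_with(to="ann@example.com", subject="Welcome!")

    def test_skips_invalid_address(self):
        mailer = Mock()
        self.assertFalse(send_welcome_email("not-an-address", mailer))
        mailer.send.assert_not_called()
```

Saved in a file, these tests can be run with `python -m unittest`. Nothing leaves the machine, so a failed experiment costs nothing.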

Focusing on the programming environment and deliberately creating a "coding paradise" that allows for efficient trial and error can make work as enjoyable as solving LeetCode problems. It's one of the best contributions that experienced programmers can make to their teams.

4. Avoid the trap of coding perfectionism

Striving for excellence in code quality is commendable, but be careful not to fall into the trap of perfectionism. Coding is not an art form that encourages the endless pursuit of perfection. While a writer may spend years perfecting a timeless masterpiece, programmers who fixate on code to an extreme extent are problematic.

No code is perfect. Most of the time, as long as your code meets current needs and leaves room for future expansion, it's good enough. A few times I have seen candidates label themselves as "clean code advocate" on their resumes. While I can feel their commitment to code quality through the screen, deep down I hope that they have already left the trap of perfectionism far behind.

5. Technology is important, but people may be more important

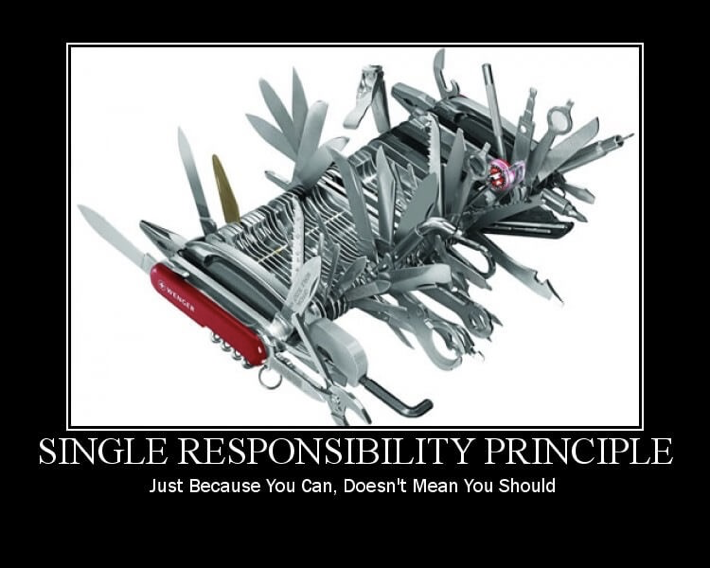

In software development, the Single Responsibility Principle (SRP) is a well-known design principle. Its definition is simple and can be summed up in one sentence: "Every software module should have only one reason to change."

To master the SRP, the key is to understand what defines a "reason to change". Clearly, programs are lifeless; they cannot and do not need to change on their own. Any reason to modify a program comes from the people associated with it – they are the true instigators of change.

Let's consider a simple example. Look at the two classes below. Which one violates the SRP?

- A dictionary data class that supports two types of operations: storing data and retrieving data;

- An employee profile class that supports two types of operations: updating personal information and rendering a user profile card image.

To most people, the first example seems fine, but the second one clearly violates the SRP. This conclusion can be reached almost intuitively, without any rigorous analysis or proof. However, if we analyze it properly, the issue with the second example becomes apparent when we find two different reasons for modification:

- Management believes that the "personal phone" field in the profile cannot contain illegal numbers and requires the addition of simple validation logic.

- An employee feels that the "name" section on the profile card image is too small and wants to increase the font size.
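One way to respond to these two reasons for change is to give each its own class. The Python sketch below is only an illustration under my own assumptions: the class names, the digits-only phone check, and the text "card" (standing in for real image rendering) are all invented.

```python
from dataclasses import dataclass


@dataclass
class EmployeeProfile:
    """Owns the profile data and its validation rules (management's concern)."""

    name: str
    phone: str

    def update_phone(self, phone: str) -> None:
        # Hypothetical rule: a valid number consists of digits only.
        if not phone.isdigit():
            raise ValueError(f"Invalid phone number: {phone!r}")
        self.phone = phone


class ProfileCardRenderer:
    """Owns how the card looks (the concern of whoever reads the card)."""

    def __init__(self, name_font_size: int = 12) -> None:
        self.name_font_size = name_font_size

    def render(self, profile: EmployeeProfile) -> str:
        # A text stand-in for the real image rendering.
        return f"[{self.name_font_size}pt] {profile.name} | {profile.phone}"
```

With this split, a new validation rule touches only `EmployeeProfile`, and a font size request touches only `ProfileCardRenderer`.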

"It is people who request changes. And you don’t want to confuse those people, or yourself, by mixing together the code that many different people care about for different reasons." — "The Single Responsibility Principle"

The key to understanding the SRP is to first understand people and the roles they play in software development.

Here's another example. Microservices architecture has been a hot topic in recent years. However, many discussions about it tend to focus only on the technology itself, overlooking the relationship between microservices architecture and people.

The essence of what differentiates microservices architecture from other concepts lies in the clearer boundaries between different modules after a large monolith is broken down into independent microservices. Compared to a large team of hundreds maintaining a monolithic system, many small organizations each maintaining their own microservices can operate much more efficiently.

Talking about the various technical benefits and the fancy features of microservices without the context of a specific organizational size (i.e., "people") is putting the cart before the horse.

Technology is undoubtedly important. As technical professionals, beautiful architectural diagrams and creative code naturally grab our attention. But make sure not to overlook "people," another critical factor in software development. When necessary, shift your perspective from "technology" to "people"; it can be significantly beneficial for you.

6. Studying is good, but learning method matters

Today, everyone is talking about "lifelong learning," and programming is a profession that especially requires this continuous pursuit of knowledge. Computer technology evolves rapidly, and a framework or programming language that was popular three years ago may well be outdated today.

To excel at their jobs, programmers need to learn a huge collection of topics spanning various areas. Taking the backend field, which I am more familiar with, as an example, a competent backend engineer should be proficient in at least the following:

One or more backend programming languages / Relational databases like MySQL / Common storage components like Redis / Design patterns / User experience / Software engineering / Operating systems / Networking basics / Distributed systems / …

Though there's a lot to learn, from my observations, most programmers actually love learning (or at least do not resist it), so mindset is not the issue. However, sometimes, just having an "eagerness to learn" isn't enough; when learning, we need to pay particular attention to the "cost-effectiveness" of our study.

Focusing on the cost-effectiveness of learning

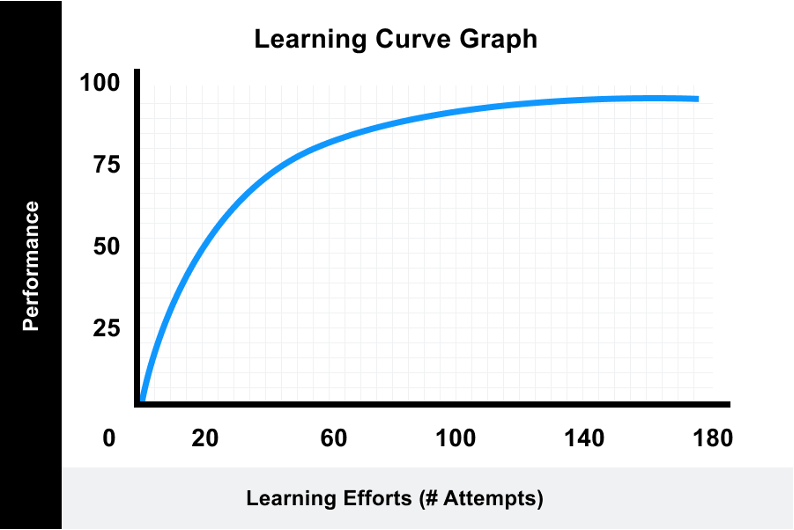

The chart above shows the relationship between learning outcomes and the effort invested.

The graph indicates that in the initial stages of learning, returns on relatively small investments grow rapidly. However, once outcomes exceed a certain threshold, the investment required to continue improving grows exponentially.

For this reason, I suggest that whenever you start learning something new, first clarify this question in your mind: "At what point on the graph should I stop?" rather than studying relentlessly.

The ocean of knowledge is limitless. Some things require years of continuous study and refinement, while others require only a quick look to gain sufficient understanding. Accurately assessing and allocating your limited learning energy is sometimes even more important than the act of studying hard itself.

Choosing appropriate learning materials

Once you have set your learning goals, the next step is to find the right learning materials. I would like to share my own failure in this regard.

At one point, I developed a strong interest in product interaction design and felt that I needed to learn more about it. So, I carefully selected a classic book in the field, “About Face 4: The Essentials of Interaction Design”, and brought it home, confident that my interaction design skills would quickly improve.

However, things didn't go as planned. When I opened that classic, I found that I couldn't even get through the first chapter—there's truth in the saying, “Don't bite off more than you can chew”.

From this failure, I gleaned a piece of advice. When learning something new, it's best to choose materials that are more accessible and suitable for beginners, rather than just aiming for the most classic and authoritative ones.

Reflecting on past experiences, I believe the following books are very suitable for beginners and offer great value for money:

- “The Non-Designer's Design Book”: Related to design

- “Don't Make Me Think, Revisited”: Related to Web user experience

Perhaps everyone wants to be knowledgeable, to know everything. But the time and energy we can allocate are always limited; we can't and don't need to be experts in everything.

7. The sooner you start writing unit tests, the better

I really, really like unit testing. I think that writing unit tests has had a profound impact on my programming career. To put it in a nutshell, if I use “starting to write unit tests” as a milestone, the latter part of my career is much more exciting than the former.

There are many benefits to writing unit tests, such as driving improvements in code design, serving as documentation for the code, and so on. Moreover, comprehensive unit testing is key to creating the “efficient trial-and-error environment” mentioned earlier.

I have written several articles about unit testing, so I won't repeat them here. Just one piece of advice: if you have never tried to write unit tests, or have never taken testing seriously, I suggest you start tomorrow.

8. What is the biggest enemy of programmers?

In most programmer jokes, product managers often appear as the villain. They constantly change project requirements, come up with new ideas every day, and leave the programmers in the lurch.

Fueled by these jokes, the image of the ever-changing product manager seems to have become the nemesis of programmers. It's as though if only the product requirements stopped changing, the work environment would instantly transform into a utopia.

While it's fun to occasionally gripe about product managers, I want to set the record straight: product managers are not the enemy.

From a certain perspective, software is inherently designed to be modified (why else would it be called "software"?). This makes developing software fundamentally different from building houses. After all, nobody would say after constructing a building, "Let's knock it down and rebuild it! The same structure but with 30% less steel and concrete!"

Therefore, product managers and unstable requirements are not the enemies of programmers. Moreover, the ability to write code that is easy to modify and adapts to change is one of the key traits that distinguish a great programmer from a good one.

So what is the biggest enemy of programmers?

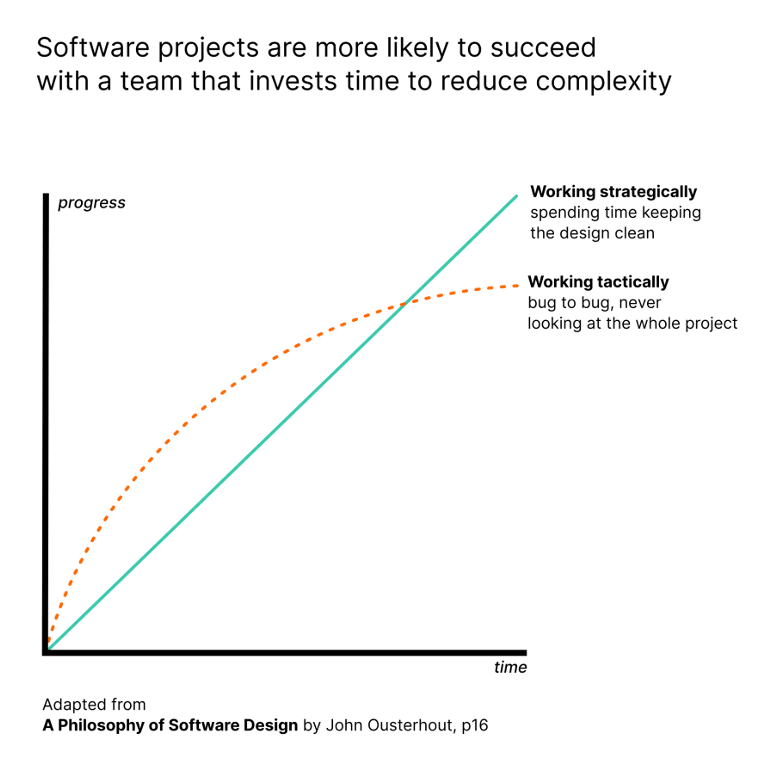

Complexity is the biggest enemy

As stated in "Code Complete", the essence of software development is complexity management. Uncontrolled complexity is a programmer's worst enemy.

Let's look at the factors that lead to ever-increasing project complexity:

- Constantly adding new features: More features means more code, and more code usually means more complexity.

- Demand for high availability: To achieve high availability, additional technical components (like message queues) and code are introduced.

- Demand for high performance: To improve performance, caching and related module code are added, and some modules are split and rewritten in faster languages.

- Repeatedly postponed refactoring: Due to tight project schedules, urgent refactoring is continuously postponed, accumulating a growing technical debt.

- Neglect of automated testing: No one writes unit tests or cares about testing.

- …

Eventually, as the project's complexity reaches a certain level, a loud crash echoes through the air. "Boom!" A massive "pitfall" that no one wants to tackle or dares to touch magically appears in everyone's IDE.

Guess who dug this hole?

The process of slowing complexity growth

While complexity will inevitably continue to grow, there are many practices that can slow this process. If everyone could do the following, complexity could be kept within reasonable limits over the long term:

- Master the current programming language and tools, write clean code

- Use appropriate design patterns and programming paradigms

- Have zero tolerance for duplicate code; abstract it into libraries and frameworks

- Apply the principles of Clean Architecture and Domain-Driven Design properly

- Write good documentation and comments

- Develop high-quality, effective unit tests

- Separate what changes from what doesn't

- ...
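As a small illustration of "separate what changes from what doesn't", the Python sketch below keeps the volatile part (discount rules) as plain data and the stable part (how a discount is applied) as code. The tiers and percentages are hypothetical values chosen only for the example.

```python
# What changes: the discount rules, kept as plain data.
# (Hypothetical customer tiers and percentages.)
DISCOUNT_PERCENT_BY_TIER = {
    "regular": 0,
    "silver": 5,
    "gold": 10,
}


# What stays the same: how a discount is applied to a price.
def discounted_price_cents(price_cents: int, tier: str) -> int:
    """Return the price in cents after applying the discount for `tier`."""
    percent = DISCOUNT_PERCENT_BY_TIER.get(tier, 0)  # Unknown tiers get no discount.
    return price_cents * (100 - percent) // 100
```

When the product team adds a "platinum" tier, only the dictionary grows. The function, and every test written against it, stays untouched.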

The list seems long, but in summary, the core message is: write better code.

In closing

In 2020, I gave a presentation to my team called "10 Insights After a Decade of Programming". After I shared the slides on the company intranet, a colleague saw them and commented that just reading the slides wasn't satisfying enough; she was hoping I could expand them into an article. I replied that I would. Now, three years have passed, and I've finally kept my promise.

When I was preparing the presentation, I had finished all the slides and had no idea what to put on the last page. Then, inspired, I went with a plain white background and typed in bold, big letters in the middle: "A decade is too short to master programming." Now, as I approach the midpoint of my second decade, I still find programming hard sometimes. I still have a lot to learn, and I've got to keep going.

This post was originally written in Chinese. I translated it to English with the help of GPT-4. If you find any errors, please feel free to let me know.